NimbleAI addresses three industry-oriented use cases, covering medical imaging, autonomous driving, and eye tracking.

An additional research-oriented use case is being addressed, related to the use of biologically plausible and size-effective neural networks.

Please reach out if you think you have a use case that could benefit from NimbleAI technology.

Hand-held and battery-powered medical imaging device

Diabetic retinopathy is a leading cause of vision loss globally. ULMA’s hand-held and battery-powered U-RETINAL medical device captures fundus images and runs convolutional neural networks for early diagnosis of this disease. The convolutional neural networks currently in use have around 100 M parameters and run on a GPU.
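To give a sense of scale for a network with around 100 M parameters, the following minimal sketch estimates the memory needed just to store its weights. The numeric formats listed are assumptions for illustration; the source does not state which precision the U-RETINAL networks use, and the function name `weight_memory_mb` is hypothetical.

```python
# Rough weight-storage estimate for a network with ~100 M parameters.
PARAM_COUNT = 100_000_000  # "around 100 M parameters" from the text

# Bytes per parameter for common numeric formats.
# Assumption: the precision actually used in U-RETINAL is not stated in the source.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_mb(param_count: int, bytes_per_param: int) -> float:
    """Return the weight storage in megabytes (1 MB = 10**6 bytes)."""
    return param_count * bytes_per_param / 1e6


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_memory_mb(PARAM_COUNT, size):.0f} MB")
```

This counts weights only; activations and intermediate buffers add to the total, which is part of why running such networks on a battery-powered device is demanding.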

A major objective of this use case is to explore how far virtual networks implemented in NimbleAI can increase the effective neuron count in inference engines, so that they can run complex convolutional neural networks such as those used in U-RETINAL. This use case will thus focus on testing the processing and inference engines in the NimbleAI architecture and on exploring their versatility to also work with conventional frame-based image sensors. Further, the use case will measure the energy-efficiency improvements brought about by NimbleAI technology relative to a GPU.

Smart monitors with 3D perception for highly automated and autonomous vehicles

Smart Monitors (SMs) are proposed to continuously monitor the environment and state of a vehicle in 3D, including the car itself, its driver, and their safety, reliability, and security. SMs are to be implemented independently of the car’s main control system, both to avoid common-cause failures and to relax the safety requirements for SMs, as they will not be part of the car’s main safety function. However, implementing SMs with state-of-the-practice devices may double energy consumption, increase costs, and reduce the range of electric cars by up to 30%. We therefore plan to drastically reduce the SMs’ energy footprint by using NimbleAI-based devices. This will allow us to install many SMs to increase overall redundancy, which in turn contributes to system safety.

SMs will autonomously analyse the 3D automotive environment from different viewpoints and gain an understanding of the overall situation. These results will be shared among all SMs to make final decisions on whether the vehicle remains in its operational design domain and whether the driving decisions taken by the car’s control system are safe.

Wearable eye tracking glasses

Eye tracking provides one of the most intuitive, seamless interfaces between a human and a machine. Furthermore, it is the key to an abundant source of data about the environment and the person themselves. The VPS 19 eye tracking glasses from Viewpointsystem are ergonomically designed to provide maximum comfort to the user, weighing 43 grams in total.

In addition to the glasses, the VPS 19 system needs a GPU-powered hand-held computer to perform complicated calculations and ensure maximum performance. To reduce the weight of the glasses and increase their usability, as well as to reduce dependency on the supporting computing device, we will investigate the effect and advantages of the NimbleAI architecture and event-based processing on eye tracking algorithms, compared to frame-based image processing.
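The difference between frame-based and event-based processing can be illustrated with a minimal sketch. It assumes a simplified event model in which a pixel emits an event only when its brightness changes by at least a threshold between two frames. The function `frame_to_events`, the threshold value, and the toy frames are hypothetical; this is not the NimbleAI or VPS 19 pipeline.

```python
# Simplified, assumed event model: a pixel emits an event only when its
# brightness changes by at least `threshold` between two consecutive frames.
def frame_to_events(prev_frame, curr_frame, threshold=15):
    """Return a list of (row, col, polarity) for pixels that changed enough."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            diff = q - p
            if abs(diff) >= threshold:
                # Polarity +1 means brighter, -1 means darker.
                events.append((r, c, 1 if diff > 0 else -1))
    return events


# Toy 3x4 frames: a bright spot (e.g. a pupil reflection) moves one pixel right.
prev_frame = [
    [10, 10, 10, 10],
    [10, 200, 10, 10],
    [10, 10, 10, 10],
]
curr_frame = [
    [10, 10, 10, 10],
    [10, 10, 200, 10],
    [10, 10, 10, 10],
]

events = frame_to_events(prev_frame, curr_frame)
total_pixels = len(curr_frame) * len(curr_frame[0])
print(events)
print(f"{len(events)} events vs {total_pixels} pixels per frame")
```

In this toy case a frame-based algorithm would process all 12 pixels, while an event-based one would handle only the 2 pixels that changed, which is the kind of saving the comparison aims to quantify.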

Our goal is to explore the potential of this technology to develop an efficient and independent eye tracking module that is less dependent on external computing power and can be integrated into other devices that benefit from eye tracking data.

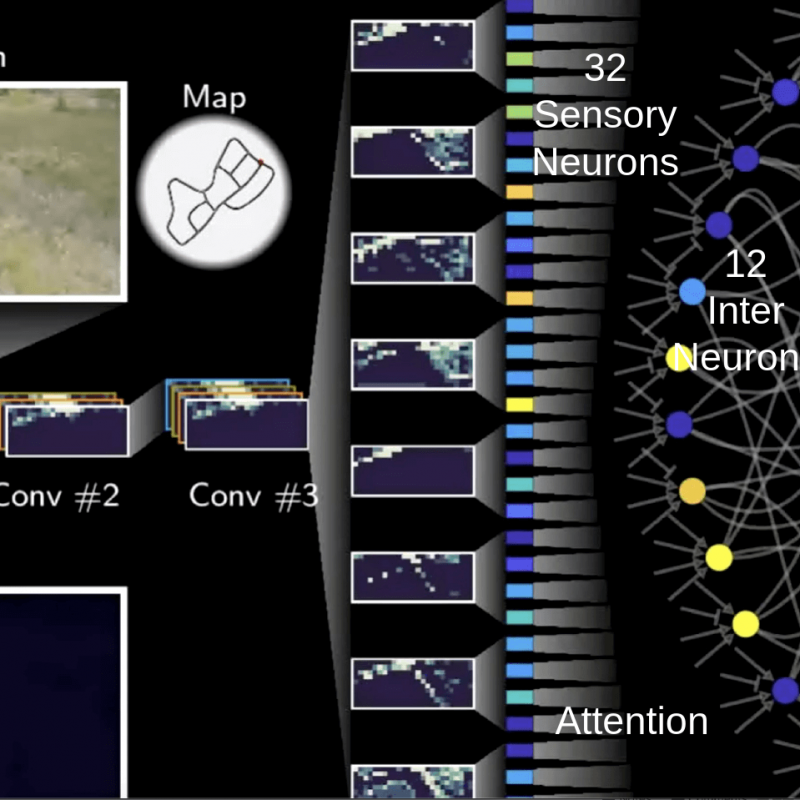

Human attention for worm-inspired neural networks

Industry-standard neural networks are large and complex, even when solving simple problems. This makes verification and interpretation nearly impossible, raising concerns about their use in safety-critical applications such as autonomous driving.

In this use case, TU Vienna will explore more biologically plausible neural networks that implement complex functionality using a smaller number of neurons. This approach is inspired by the nervous system of the C. elegans worm and results in a smaller model that can be better understood, making it easier to reason about its correctness (see the Nature Machine Intelligence paper: Neural circuit policies enabling auditable autonomy). One of the intended uses of this novel neural network is to power the Smart Monitors (SMs) proposed in the autonomous driving use case above, with the objective of identifying attentional targets on the road.

A major objective of this use case is to demonstrate the ability of the NimbleAI post-silicon flexible architecture to efficiently host and run the unconventional neural networks and models that the research community is proposing as AI continues to evolve at a tremendous pace. By using the hardware acceleration and the event-based sensing and processing of the NimbleAI stack, we expect to “run a worm brain with human attention on a visual sensor”.